
August 14, 1991, was a seemingly uneventful day in the history of science. But on that day a new model of scientific publishing, the preprint server, was born. In the three decades since, the phenomenon has grown into a substantial avenue for disseminating research.

For around a year before that date, astrophysicist Joanne Cohn, then at Princeton University, had maintained an email list for sharing unreviewed manuscripts among a group of theoretical physicists, mostly on string theory. In the summer of 1991, Cohn chatted with physicist Paul Ginsparg at a workshop at the Aspen Center for Physics. Ginsparg had recently taken a position at Los Alamos National Laboratory and had been on Cohn’s original email list, and he asked her about automating the mailings. According to Cohn, he offered to build a system that would make it easier to share the preprints in her archive, and he began writing sample scripts immediately after their conversation. Two months later, on August 14, the Los Alamos ArXiv launched. It kept a central repository of papers and distributed them via automated emails to roughly 180 researchers in more than 20 countries.

Since then, the Los Alamos ArXiv has evolved from a repository and listserv into an FTP file server and then into a website, arXiv.org. It has also expanded beyond theoretical physics to include papers in astronomy, computer science, mathematics, and quantitative biology. The site eventually inspired the creation of field-specific preprint servers: bioRxiv for biology, ChemRxiv for chemistry, and, most recently, medRxiv for medical research. According to its website, arXiv now receives about 16,000 submissions per month and holds almost 2 million manuscripts. Monthly submissions to bioRxiv, ChemRxiv, and medRxiv number in the hundreds or thousands.

COVID-19 greatly accelerated this trend. bioRxiv, ChemRxiv, and medRxiv were flooded with manuscripts on the viral scourge that mushroomed into a pandemic in early 2020. According to statistics tracked by the National Institutes of Health, about 20 percent of the research output on COVID-19 has come in the form of preprints. Preprint servers seemed ready for their close-up, and they showcased their main advantages over conventional science publishing. They offered a quicker path from generating findings to sharing them with the broader scientific community and the public. They also offered a way around the not-entirely-wart-free process of peer review. The world had an overwhelming appetite for insight into SARS-CoV-2. Preprint servers offered a new pipeline that could accelerate science in a crisis, when time was of the essence and newly gained knowledge might help cut short a hugely disruptive pandemic.

But this idealized vision of streamlined research communication never materialized. Instead, misleading results circulated widely, and questionable therapies were embraced as panaceas. In this issue, toxicologist and emergency medicine physician Michael Mullins details the case of hydroxychloroquine (HCQ), a drug that clinicians have prescribed for years to treat rheumatic diseases. In March 2020, as COVID-19 swept the globe, researchers posted a preprint to medRxiv touting HCQ’s utility in treating SARS-CoV-2 infection. Reports of the hopeful development spread through the popular press, the US stockpiled the drug, and prescriptions spiked by April. But within weeks, more-detailed analyses indicated that HCQ was not effective against COVID-19. Could lives have been saved, or harm to health avoided, if the world had not seized on the mistaken promise of a drug that turned out to be ineffectual? Perhaps. Was that preprint at fault? Perhaps. “Just as ‘news is the first rough draft of history,’ preprints may become the first rough draft of science,” writes Mullins, of Washington University School of Medicine in St. Louis.

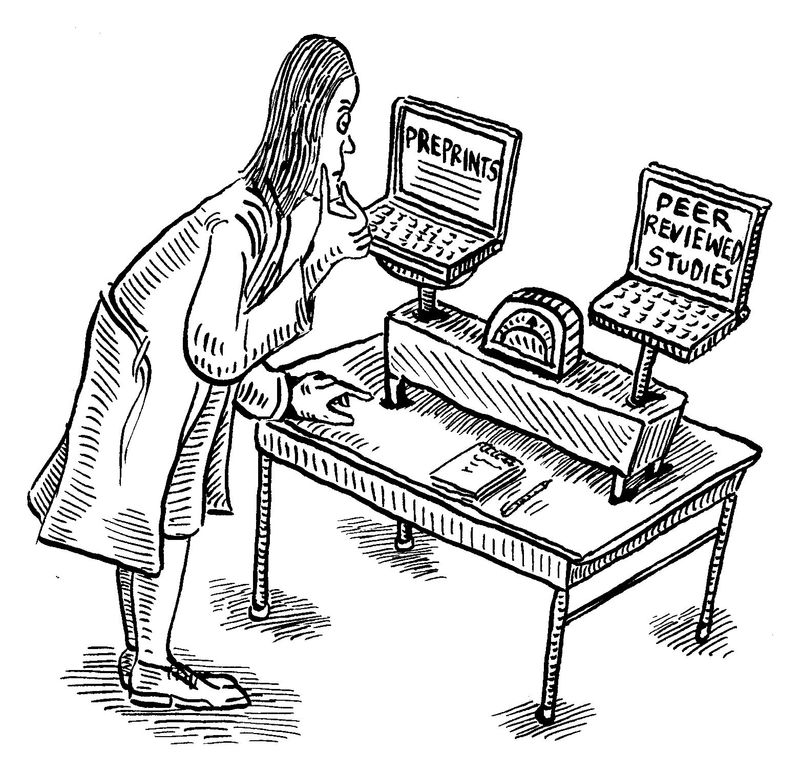

Journalism is a key link in the dissemination of scientific discovery and, ultimately, its translation to clinical practice. As is the case with all research, peer-reviewed or not, the proper framing of science is essential to broadcasting trustworthy, sound, and potentially actionable research findings. With preprints, the job is even tougher, as the findings in question have not had to pass any vetting before becoming public. The data or conclusions in preprints aren’t shoddy or misleading by default. But absent the quality assurance layer of peer review—even with all of the shortcomings and pitfalls that The Scientist and others have explored—preprints run a higher risk of putting forth results that are less than reliable.

This simply means that journalistic outlets, members of the public, researchers, politicians, and other interested parties must be extra vigilant when considering findings reported in preprints. Mullins is also the editor in chief of the open-access journal Toxicology Communications (and my next-door neighbor). He and other scientists offer several suggestions for how the research community can put preprints in their proper context. Preprints could be given DOI numbers that expire after a set time, instead of the permanent DOIs they now receive. They could be called “unrefereed manuscripts.” Each page of a preprint could carry a warning label that alerts readers that the paper has not been reviewed. Any of these steps may well help present preprints as what they are: first drafts of science.

On the journalism side, it’s imperative that newsrooms at media behemoths and niche publications alike adopt policies for appropriately contextualizing and vetting findings reported in preprints. Such policies should strike a balance between communicating valuable information rapidly and disseminating well-founded scientific insights. At The Scientist, we have done just this, and our policies on preprints are posted on the Editorial Policies page of www.the-scientist.com. Please head there to review the specifics, and feel free to share your thoughts and comments on our social media channels. In my opinion, preprints and the servers that host them still hold a promise and utility that may help when the next global health emergency comes knocking. All of us in the scientific community, the public, the press, and the political sphere must adjust how we view and treat these first drafts of science. That way we can avoid the pitfalls and reap the benefits of more direct communication of research findings.

Bob Grant